Ah right. It’s not gonna work on Windows (for now). I don’t think the main Max for Live device will work properly either. It may pass sound, but the underlying code isn’t fully compiled for Windows. This will change by the time it’s fully released, but that hasn’t happened yet.

I will wait for the official release then!

Thanks for helping though.

Only moderately related, in terms of chasing latency, have you or anyone you know thought about analog / hardware-only options for processing the audio from the sensor?

After reading your post, I’m thinking about running thru (1) a carefully leveled preamp, (2) a compressor to kill that huge initial transient you show, and (3) some guitar pedals to make weird effects. If it’s interesting, I’ll report.

You’ll still need some phantom power, but your preamp can cover that. The pure onset detection happens really quickly (in Max), with the only latency being your vector I/O settings.

But could definitely be interesting to play with.

This is all really impressive @Rodrigo - awesome!

What exactly is the DPA 4060 doing here? I’d guess that the DPA 4060 is being used for some frequency domain analysis (brightness, noisiness etc) hence sample selection, whilst SP offers onset detection? The reason I ask is the earlier note regarding IR correction given the SP offers a signal which is better suited to transient/onset detection (which isn’t surprising). It’s unclear why/if that is necessary for this application given you’re using the traditional mic and SP.

In this ‘Spectral Compensation Demo’, it’s not obvious to me whether you’re just playing the manipulated samples, or also the mixed/convolved/something-d DPA and SP inputs (I don’t know Max/MSP)?

For the audio analysis portion (which seems to use just the DPA input), I’d be interested if you’ve tested with contact mics or just crude piezo drum triggers. Then, also the same on mesh drumheads.

Looking forward to seeing where you go with this project!

In that particular video I’m using both mics. The SP pickup for the transients only, and then the DPA 4060 for the audio analysis. It would still work without two mics, just in my case I always have the DPA on there anyways and it sounds worlds better than the SP one, and also gives me better audio analysis.

That being said, since I’m not processing the audio here, I’m only measuring relative loudness and spectral shape etc…, the quality of the mic isn’t massively important. Darker sounds will still be darker, brighter sounds will still be brighter etc…
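To illustrate why the relative ordering survives a worse mic, here is a minimal sketch using spectral centroid as a stand-in for "brightness". Everything in it is an assumption for illustration: the test signals, the one-pole low-pass standing in for a dull microphone, and the naive DFT. It is not how the analysis in the video is implemented.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (slow, but fine for short frames)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency in Hz (higher = brighter)."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0
    bin_hz = sample_rate / len(frame)
    return sum(k * bin_hz * m for k, m in enumerate(mags)) / total


def one_pole_lowpass(frame, coeff=0.5):
    """Crude stand-in for a dull-sounding mic: rolls off the high end."""
    out, prev = [], 0.0
    for x in frame:
        prev = (1.0 - coeff) * x + coeff * prev
        out.append(prev)
    return out


def two_tone(low_amp, high_amp, sample_rate, n):
    """Mix of a 250 Hz and a 2 kHz sine with the given amplitudes."""
    return [
        low_amp * math.sin(2 * math.pi * 250 * i / sample_rate)
        + high_amp * math.sin(2 * math.pi * 2000 * i / sample_rate)
        for i in range(n)
    ]


SR, N = 8000, 256
dark = two_tone(1.0, 0.2, SR, N)    # mostly low-frequency energy
bright = two_tone(0.2, 1.0, SR, N)  # mostly high-frequency energy

for label, mic in (("good mic", lambda f: f), ("dull mic", one_pole_lowpass)):
    print(label, round(spectral_centroid(mic(dark), SR)), round(spectral_centroid(mic(bright), SR)))
```

The absolute centroid values shift with the simulated mic, but the dark sound still measures darker than the bright one, which is all a relative analysis needs.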

The mic correction stuff I’ve done also mitigates that too since the SP pickup has a very unnatural “sound” to it, so using the corrected version for the audio analysis gives a more perceptually meaningful set of descriptors/analysis.

When I release the SP tools I’ll have a couple options so you can do the same thing with just a single SP pickup (I imagine most people will be in this boat), as well as having options for a secondary “analysis” mic for those who are fancy.

This is a really shit/crude demo, but I wanted to show, roughly, what I meant by spectral compensation. What’s happening in that video is that I’m triggering the same sample over and over, and only changing the compensation by applying the spectral envelope of the hits on the drum (which we hear via the camera mic, but aren’t part of the audio playback) or little bursts of colored noise at the start.

As mentioned above, the analysis would work fine with whatever mics you throw at it (even contact mics). Having some correction will make it more perceptually meaningful and give you better matching, but the premise is the same. I guess a metaphor might be using one of those motion or shape tracking cameras. It will work on a shitty webcam or a 4k mega camera, since it’s just tracking movement (though a lot more CPU involved for 4k in this example).

Bit of a bump on this as I had a great chat with @Giovanni_Iacovella a few weeks ago and it got turned into a project I have called Particle Castle Bubble Party!

We cover loads of topics, but center mainly around audio analysis, onset-detection, and using SP hardware within Max:

As a general update, I’ve not moved the SP Tools too much further (other than what I show in the video), but I’ve not forgotten about it! I’m just trying to improve some of the general classification stuff first (the core thing that the native SP software does).

Super necro bump here, but I’ve been working on this again finally and wanted to pick the brains of people who would be potentially interested in working with this.

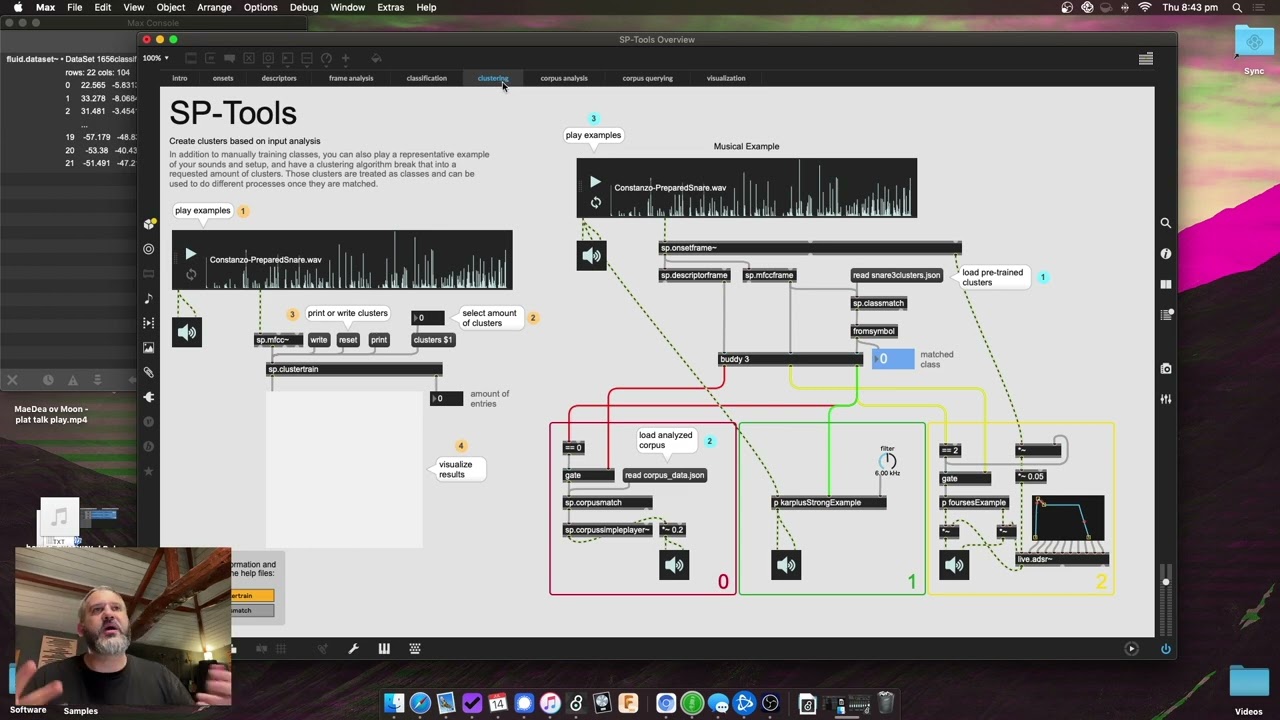

At the moment I’ve gotten a good classifier working (the core “learn/match” thing the SP software does), improved the overall analysis/descriptors, improved the spectral compensation stuff demo’d above and am building a couple Max for Live devices that do some “higher-level” things like the Corpus-Based sampler:

So far it’s going to be a bunch of semi-low-level abstractions that do specific tasks like:

sp.onset - tuned trigger (and gate) onsets

sp.descriptors - spits out onset descriptors (optional derivatives)

sp.mfcc - spits out mfccs

sp.classifier (matching only, read sp.train file)

sp.train (pick zone, learn on/off, write file)

sp.cluster (define zones from any amount of inputs)

sp.onsetframe (used to connect to other objects)

sp.controllers (spit out the speed/velocity/timbre variables)

sp.correction - convolved output (@drum snare, kick, tom modes)

The idea being that you could chain/connect some of these together and build systems to do stuff in Max with. With some of the pieces you can recreate the core matching/training things you can with the native SP software which is cool, but my interest in this is to push beyond that and let you do things that you couldn’t otherwise (e.g. the Corpus-Based sampler, mapping audio analysis/descriptors to parameters).
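For anyone unfamiliar with the corpus-based idea, the core of it can be sketched as a nearest-neighbour lookup: analyse the incoming hit, then play back whichever corpus entry has the closest descriptors. The snippet below is a generic illustration in Python rather than Max, and the corpus entries, descriptor choice (loudness and brightness, both assumed to be pre-scaled to 0..1) and function name are all hypothetical.

```python
import math


def nearest_entry(query, corpus):
    """Return the corpus entry whose descriptors are closest to the query.

    Uses plain Euclidean distance, so descriptors are assumed to be
    scaled to comparable ranges beforehand.
    """
    return min(corpus, key=lambda entry: math.dist(query, entry["descriptors"]))


# Hypothetical corpus: (loudness, brightness), each normalised to 0..1.
corpus = [
    {"name": "low_thud", "descriptors": (0.8, 0.1)},
    {"name": "soft_brush", "descriptors": (0.2, 0.5)},
    {"name": "bright_ping", "descriptors": (0.7, 0.9)},
]

# A loud, bright hit on the drum picks the closest-sounding sample.
hit = (0.9, 0.8)
print(nearest_entry(hit, corpus)["name"])
```

Scaling matters here: without it, a descriptor measured in Hz would swamp one measured in dB in the distance calculation.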

So if you’re a drummer who does stuff in Max and want to have a chat about how you may want to use it, drop me a line! (rodrigo.constanzo@gmail.com)

It’s mainly to see what some of the use cases may be, what’s missing (in terms of the SP software itself or the things listed above), how granular the system should be (low vs high-level) etc…

Ok, I have finally made the v0.1 (alpha I’d say at this stage) of the package, along with a couple intro videos.

Here’s a teaser vid of some of the things you can do:

Here’s an overview of the package:

And here’s where to download it:

Let me know what you think!

Wow, this looks incredible – look forward to digging in. Thank you!

Man, you did it! Amazing! This is the stuff I’ve been hoping to see, and might actually be the push for me to really dive into Max and learn it well. If you need any help with developing the tools, feel free to shoot me a message.

Big v0.2 update adding “controllers” (meta-parameters) and “setups” (scaling and neural network prediction).

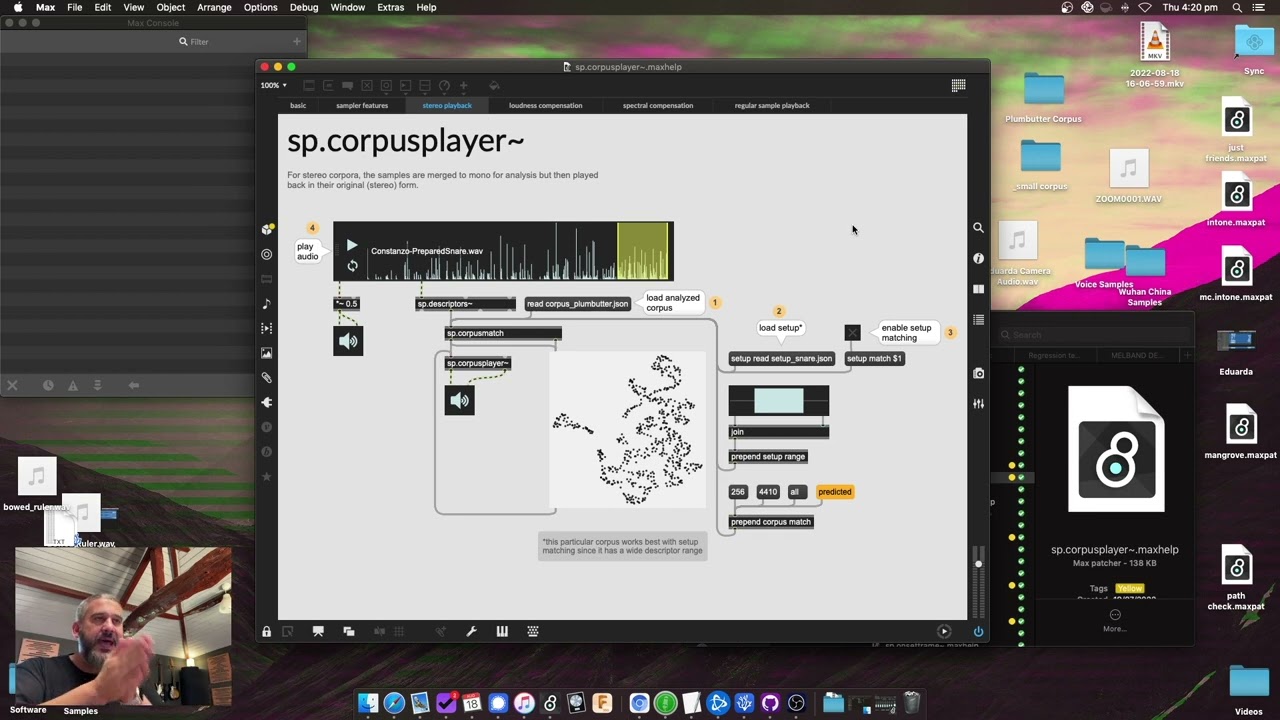

Another update (v0.3) which adds the ability to filter corpora by descriptors, completely revamps the underlying playback/sampler to be a lot more similar to the native SP sampler (start/length, attack/hold + curves, speed, etc…) and adds realtime descriptor analysis too:

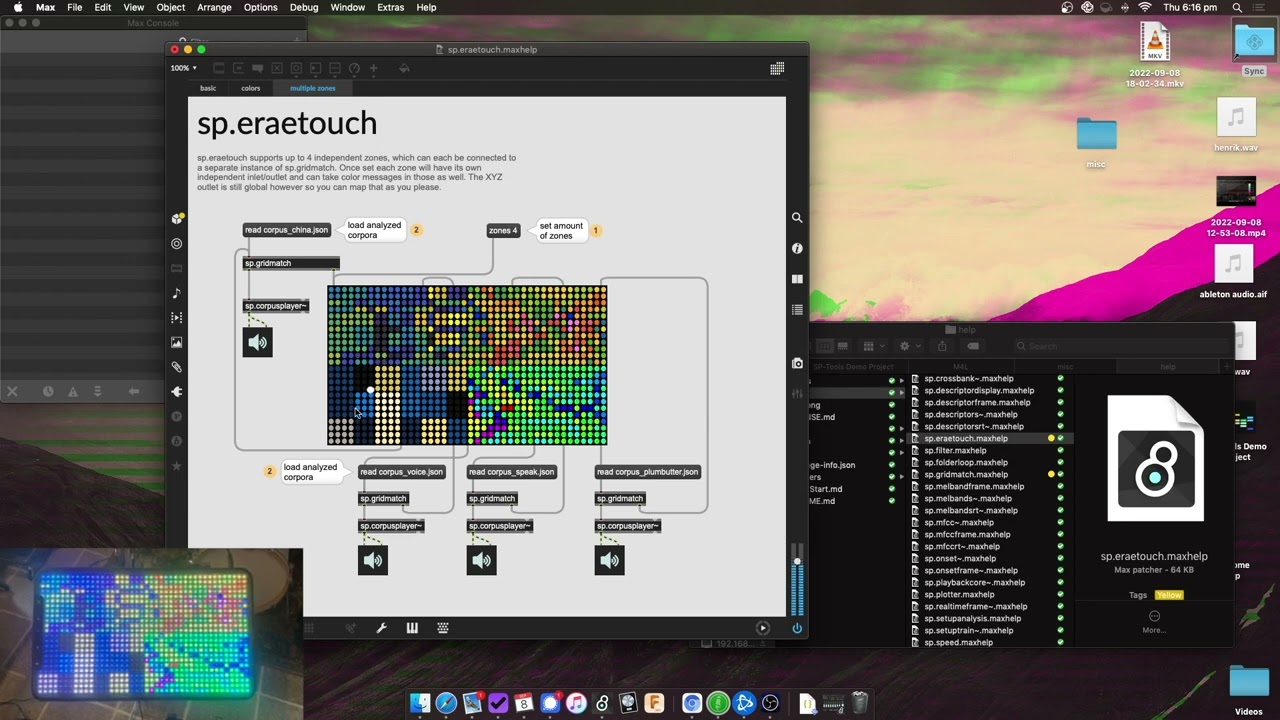

Don’t wanna bombard this forum with stuff, but there was a v0.4 update a bit ago, and today I’ve put out a v0.5 update which adds some Max for Live devices, so that should ease the entry point for many users as you can leverage some of the creative funny-business into your existing Live workflows.

Changelog

v0.5 - SP-Tools v0.5 Video Overview

- added Max for Live devices for some of the main/flagship functionality (Concat Match, Controllers, Corpus Match, Descriptors, Speed)
- added sp.gridmatch abstraction for generic controller-based navigation of corpora
- added support for the Erae Touch controller (sp.eraetouch)
- improved path stability when loading example corpora

Here’s the v0.6 update.

Massive update, particularly in terms of Max for Live. There’s basically 16 M4L devices now which cover (nearly) all the functionality of what you can do with SP-Tools in Max. You can obviously do more in Max, as that’s the nature of it being a coding language, but I wanted to make sure that you could do each of the types of things you can do with SP-Tools.

I’ve also done something different with this video: as well as the typical overview, it’s a walkthrough where I take you through the devices and show how they work in context.

Here’s version v0.8!

This one’s been a long time coming and is the biggest update so far, adding a whopping 16 new abstractions and 3 new Max for Live devices (full changelog below).

There’s also a Discord server now for updates, sharing patches/corpora, new ideas, etc…:

Changelog

v0.8 - Sines, Synthesis/Modelling, and Documentation

- added synthesis and physical modelling objects (sp.karplus~, sp.resonators~, sp.resonaroscreate~, sp.shaker~, sp.sinusoidplayer~, sp.sinusoids~, sp.waveguidemesh~, sp.lpg~, sp.lpgcore~)
- added Max for Live devices for some of the new processes (Resonators, Sinusoids, Waveguide Mesh)
- added new descriptor type (sines) and corresponding objects (sp.sineframe, sp.sines~, sp.sinesrt~) (you should reanalyze all your corpora)
- added utilities for filtering and creating clusters of triggers and gates (sp.probability~, sp.triggerbounce~, sp.triggercloud~, sp.triggerframe~)
- added absolute start and length parameters to sp.corpusplayer~ (via startabsolute and lengthabsolute messages)
- added scramble transformation to sp.databending
- added slew parameter to sp.speed
- added ability to loop floats and ints (as well as conventional descriptors) to sp.datalooper~
- added proper Max documentation via reference files, autocomplete, etc…
- added sample rate adaptation to all realtime and offline analyses. Previously things were optimized for, and assumed, 44.1k/48k, but now everything works at every sample rate (up to 192k)
- added some puredata abstractions and help files to the package (in the puredata folder)
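On the sample rate adaptation point, one common way to make an analysis rate-independent is to define window lengths in milliseconds and convert to samples at whatever rate is running. The sketch below shows that general idea only; it is an assumption about the approach, not a description of how the package does it, and both function names are made up.

```python
def window_samples(duration_ms: float, sample_rate: float) -> int:
    """Convert a window length in milliseconds to a whole number of samples."""
    return max(1, round(duration_ms * sample_rate / 1000.0))


def next_power_of_two(n: int) -> int:
    """Smallest power of two >= n, handy when picking an FFT size."""
    return 1 << (n - 1).bit_length()


# The same 10 ms analysis window at different sample rates.
for sr in (44100, 48000, 96000, 192000):
    n = window_samples(10, sr)
    print(f"{sr:>6} Hz: {n} samples, FFT size {next_power_of_two(n)}")
```

Hard-coding a sample count instead (e.g. always 441) would cover 10 ms at 44.1k but only about 2.3 ms at 192k, which changes what the analysis actually sees.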

Looks like that Kickstarter for the MIDI controller didn’t deliver. Which one did you end up going with?

Do you mean the OffGrid thing?

Was bummed about that as the form factor is ideal for my use case. I have a bit of a start on an Arduino-based thing in a similar size/form factor, but other stuff has been occupying my foreground.

Yah, it looked like the OffGrid team was a bit too chaotic. /sad/

Did you see the Artiphon Orba? Introducing Orba 2 by Artiphon | Artiphon

Yeah, that looks cool but would be a nightmare for my use case (both in terms of mounting as well as quick/easy access to a load of buttons).